Dec 29, 2025

What AI Models Can Teach Us About Human Bias in Investing

What can AI models teach us about human bias in investing?

They reveal how deeply emotions, assumptions, and structural incentives shape financial decisions, often in ways investors don’t realize.

By analyzing markets as systems built on human behavior, transparent AI models expose patterns like overconfidence, confirmation bias, and herd thinking, helping investors recognize bias instead of unknowingly reinforcing it.

For decades, the investment industry has treated bias as a personal failing, something to be trained out of analysts through experience, discipline, or better incentives. But the rise of AI in finance has revealed something uncomfortable: many of the most persistent investment mistakes are not merely random or emotional. They are structural.

And when machines are trained on markets, they don’t just surface patterns in price or fundamentals. They surface patterns in human behavior. In doing so, AI models have become an unexpected mirror, reflecting how biased professional investing really is.

The myth of the rational investor

Modern finance is built on the assumption of rational actors. Yet behavioral finance has spent decades dismantling that idea.

Daniel Kahneman and Amos Tversky showed that people, investors included, systematically overweight recent information, anchor on initial prices, and feel losses more strongly than gains of the same size. Later research quantified the cost. A widely cited study by Barber and Odean found that overconfident investors trade more and earn significantly less than their peers. Dalbar's annual Quantitative Analysis of Investor Behavior has likewise reported that the average equity fund investor underperformed the S&P 500 by several percentage points a year, a gap it attributes largely to poor, emotionally driven timing.
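How timing alone can open a gap between a fund's return and its investors' return is easiest to see in a toy calculation. The sketch below uses hypothetical numbers, not data from any study: a fund gains 30% in year one and loses 20% in year two, and an investor adds money after the strong year. The fund's time-weighted return is compared with the investor's money-weighted return (an internal rate of return, found here by simple bisection).

```python
# Toy illustration of a timing gap: time-weighted vs money-weighted return.
# All numbers are hypothetical and chosen only to show the mechanism.

def time_weighted_return(period_returns):
    """Annualized growth of $1 held through every period, ignoring cash flows."""
    growth = 1.0
    for r in period_returns:
        growth *= 1.0 + r
    return growth ** (1.0 / len(period_returns)) - 1.0


def npv(rate, cash_flows):
    """Net present value of cash flows dated at periods 0, 1, 2, ..."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


def money_weighted_return(cash_flows, lo=-0.99, hi=1.0, tol=1e-10):
    """IRR by bisection; assumes NPV changes sign exactly once on [lo, hi]."""
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if npv(mid, cash_flows) > 0:
            lo = mid  # NPV still positive, so the IRR is higher
        else:
            hi = mid
        if hi - lo < tol:
            break
    return (lo + hi) / 2.0


fund_returns = [0.30, -0.20]  # hypothetical: a strong year, then a drawdown
deposits = [1000.0, 1000.0]   # investor adds money after the strong year

# Deposit at the start of each year, then apply that year's return.
balance = 0.0
for deposit, r in zip(deposits, fund_returns):
    balance = (balance + deposit) * (1.0 + r)

# Investor's view: deposits are outflows, the final balance is an inflow.
cash_flows = [-deposits[0], -deposits[1], balance]

twr = time_weighted_return(fund_returns)
mwr = money_weighted_return(cash_flows)
print(f"fund (time-weighted):      {twr:+.2%}")
print(f"investor (money-weighted): {mwr:+.2%}")
```

With these numbers the fund compounds at roughly +2% a year, while the investor who bought after the good year earns roughly -5.4% on the money actually invested: same fund, different outcome, purely from timing.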

These findings are not limited to retail investors. Professional analysts, portfolio managers, and institutional committees are subject to the same cognitive shortcuts. The difference is that their biases are often disguised as process.

When AI learns from markets, it learns from us

AI models do not arrive unbiased. They are trained on historical data, which is itself the product of millions of human decisions. Fear during drawdowns. Euphoria during bubbles. Herd behavior around narratives. Anchoring to price targets. Confirmation bias reinforced by consensus.

When an AI model analyzes markets, it is effectively studying the aggregate psychology of investors.

This helps explain why early AI investing tools often failed to deliver on their promises. Many models simply optimized for prediction accuracy without exposing the assumptions behind their outputs. They produced confident signals, but not understanding.

As one former hedge fund CTO put it in an internal report leaked in 2022, “The model wasn’t wrong. It was just repeating the market’s existing biases faster than humans could.”

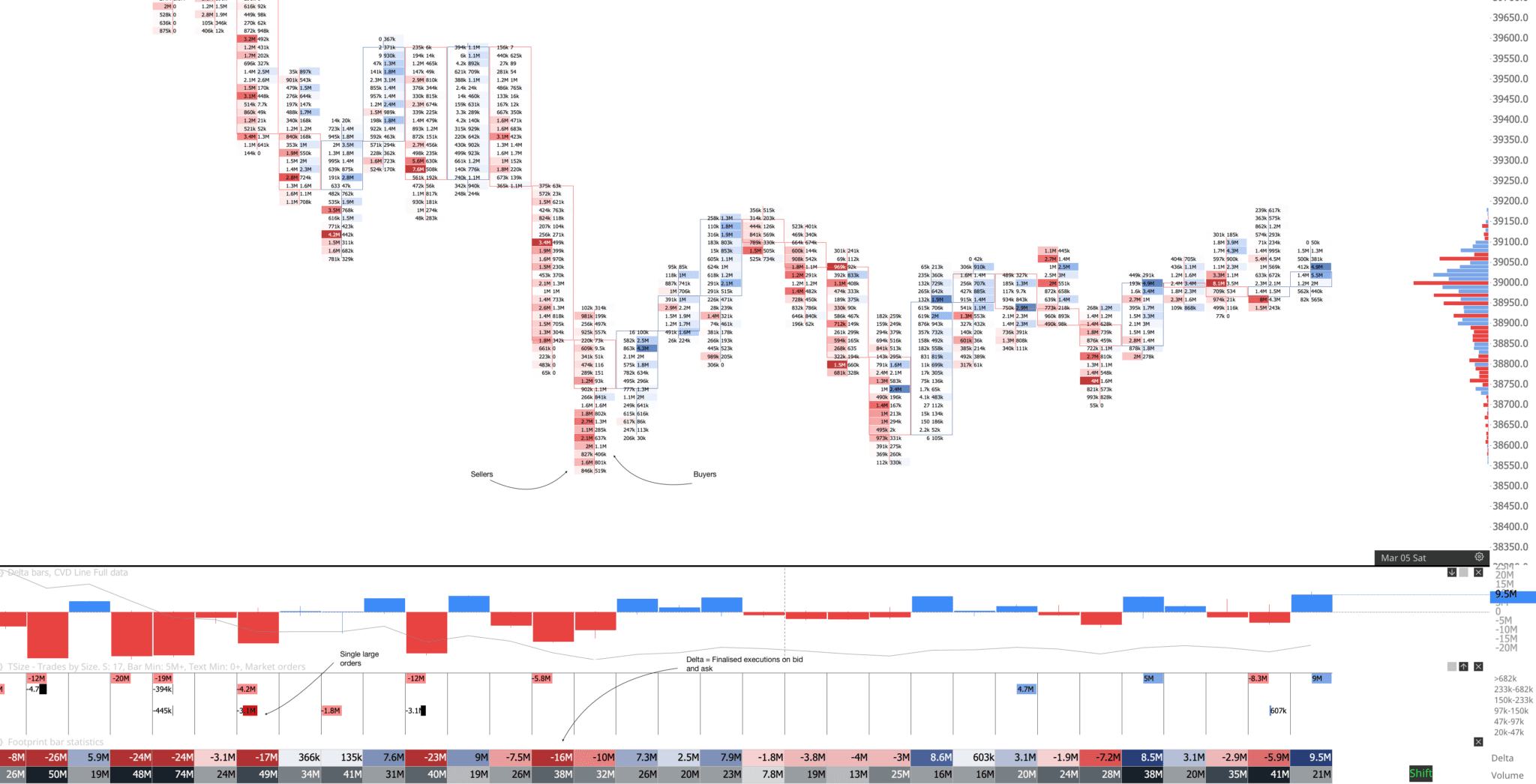

Source: TradingRiot

Bias is not eliminated by automation

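There is a common misconception that AI is emotionless and therefore objective. In reality, AI is merely non-emotional: it has no feelings of its own, but it is not free of emotion's influence, because it learns from data shaped by human fear and greed. That distinction matters.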

AI does not feel fear or greed, but it can amplify bias if its inputs are biased and its outputs are opaque. Black-box systems that average indicators or optimize for short-term accuracy often reinforce consensus thinking rather than challenge it.
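Averaging is where disagreement disappears. In the sketch below, two hypothetical sets of indicator scores (on an assumed scale from -1, bearish, to +1, bullish) produce exactly the same average, yet one set is uniformly neutral and the other is a tug-of-war. Only a dispersion measure reveals the difference; the indicator names and values are illustrative assumptions.

```python
from statistics import mean, pstdev

# Hypothetical indicator scores on an assumed -1 (bearish) to +1 (bullish) scale.
quiet_market = {"momentum": 0.0, "valuation": 0.0, "sentiment": 0.0}
split_market = {"momentum": 0.9, "valuation": -0.9, "sentiment": 0.0}


def summarize(scores):
    values = list(scores.values())
    return {
        "average": mean(values),       # what an averaging black box reports
        "dispersion": pstdev(values),  # how strongly the inputs disagree
        "range": max(values) - min(values),
    }


for name, scores in [("quiet", quiet_market), ("split", split_market)]:
    s = summarize(scores)
    print(f"{name}: average={s['average']:+.2f} "
          f"dispersion={s['dispersion']:.2f} range={s['range']:.2f}")
```

A system that reports only the average treats both situations as "neutral"; reporting the spread alongside it keeps the conflict visible.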

This became evident during periods of market stress. During the 2020 COVID crash, many algorithmic systems rapidly de-risked in unison, exacerbating volatility. In 2022, models trained heavily on growth-era data struggled to adapt to a rising-rate regime, systematically overvaluing long-duration assets.
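Why a rate shift hits long-duration assets harder follows from plain discounting. The sketch compares two hypothetical payouts of the same nominal size, one received in year 5 and one in year 30, at an assumed 2% "growth-era" rate and an assumed 5% "rising-rate" discount rate. The numbers are illustrative and do not model any particular asset.

```python
def present_value(cash_flows, rate):
    """Discount a list of (year, amount) pairs at a flat annual rate."""
    return sum(amount / (1.0 + rate) ** year for year, amount in cash_flows)


# Hypothetical assets: the same nominal payout of 100, received early or late.
assets = {
    "short-duration (paid in year 5)": [(5, 100.0)],
    "long-duration (paid in year 30)": [(30, 100.0)],
}

LOW_RATE, HIGH_RATE = 0.02, 0.05  # assumed discount rates for the two regimes

for name, flows in assets.items():
    pv_low = present_value(flows, LOW_RATE)
    pv_high = present_value(flows, HIGH_RATE)
    change = pv_high / pv_low - 1.0
    print(f"{name}: {pv_low:.1f} -> {pv_high:.1f} ({change:+.1%})")
```

The near-term payout loses roughly 13% of its value while the year-30 payout loses roughly 58%, so a model whose valuations implicitly assume the low rate overstates long-dated value far more than near-term value once rates rise.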

The lesson was clear. Removing emotion does not remove bias. It simply changes its form.

What AI reveals when designed for transparency

The most interesting insight from modern AI systems is not their ability to predict prices. It is their ability to show how decisions are formed.

When models are designed to expose reasoning, conflicts, and confidence levels, they reveal something human analysts often miss: bias usually appears as imbalance, not error.

For example, an AI system may show that a bullish recommendation is driven almost entirely by momentum while fundamentals are deteriorating. Or that a “strong buy” relies on valuation assumptions that only hold in low-rate environments. Or that sentiment indicators are dominating signals during late-stage rallies.

By separating inputs, weighting them explicitly, and tracking how confidence changes as data shifts, AI can make bias visible rather than hidden.
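A minimal sketch of separating inputs and weighting them explicitly, assuming each input has already been reduced to a score between -1 and +1. The factor names, weights, and the 60% dominance threshold are hypothetical choices for illustration. The combined view comes with a per-input breakdown, so an imbalance such as "bullish almost entirely on momentum while fundamentals deteriorate" shows up as a warning rather than staying buried in a single number.

```python
from dataclasses import dataclass


@dataclass
class Contribution:
    factor: str
    score: float   # assumed scale: -1 (bearish) to +1 (bullish)
    weight: float  # explicit, visible weight

    @property
    def value(self) -> float:
        return self.score * self.weight


def explain(contributions, dominance_threshold=0.6):
    """Combine weighted scores and flag views that hinge on a single input."""
    total = sum(c.value for c in contributions)
    gross = sum(abs(c.value) for c in contributions) or 1.0  # avoid dividing by zero
    shares = {c.factor: abs(c.value) / gross for c in contributions}
    top = max(shares, key=shares.get)
    opposing = [c.factor for c in contributions if c.value * total < 0]
    warnings = []
    if shares[top] > dominance_threshold:
        warnings.append(f"{top} drives {shares[top]:.0%} of the signal")
    if opposing:
        warnings.append("inputs pointing the other way: " + ", ".join(opposing))
    return total, shares, warnings


# Hypothetical stock: strong momentum, deteriorating fundamentals.
view = [
    Contribution("momentum", 0.9, 0.5),
    Contribution("fundamentals", -0.4, 0.3),
    Contribution("sentiment", 0.2, 0.2),
]
total, shares, warnings = explain(view)
print(f"combined score: {total:+.2f}")
for factor, share in shares.items():
    print(f"  {factor}: {share:.0%} of gross signal")
for w in warnings:
    print("  warning:", w)
```

Re-running `explain` as new scores arrive is one simple way to track how the balance of a view shifts over time.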

The difference between prediction and explanation

Most AI investing tools focus on outcomes. Buy. Sell. Hold. They optimize for decisiveness. But professional investors do not need decisiveness. They need clarity.

This is where explainable AI systems change the conversation. Instead of asking, “What does the model think will happen?” the better question becomes, “Why does the model think this, and how fragile is that view?”
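One simple way to put a number on "how fragile is that view?" is to ask how far each input would have to move, on its own, to push a weighted score to zero, the point where the call flips. The sketch assumes a linear weighted score with hypothetical factors and weights on a -1 to +1 scale; a system that combines inputs non-linearly would need a search over perturbations instead of the closed-form step used here.

```python
def flip_distances(scores, weights):
    """For each input, the change in its score (alone) that brings the total to zero.

    Returns None for inputs that cannot flip the view within the assumed [-1, 1] range.
    """
    total = sum(scores[f] * weights[f] for f in scores)
    distances = {}
    for factor, score in scores.items():
        w = weights[factor]
        if w == 0:
            distances[factor] = None
            continue
        needed = -total / w  # change that zeroes the weighted total
        new_score = score + needed
        distances[factor] = abs(needed) if -1.0 <= new_score <= 1.0 else None
    return total, distances


# Hypothetical inputs and weights.
scores = {"momentum": 0.9, "fundamentals": -0.4, "sentiment": 0.2}
weights = {"momentum": 0.5, "fundamentals": 0.3, "sentiment": 0.2}

total, distances = flip_distances(scores, weights)
print(f"score {total:+.2f}")
for factor, d in distances.items():
    print(f"  {factor}: " + ("cannot flip the view alone" if d is None
                             else f"flips the view if it moves by {d:.2f}"))
```

Here only a momentum reversal can overturn the bullish call on its own, which is another way of saying the view rests on a single input.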

Research from the CFA Institute has consistently emphasized that decision quality improves when analysts understand uncertainty ranges rather than relying solely on point estimates. AI systems that expose confidence decay, conflicting signals, and aging assumptions align far better with how professionals actually think.
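To illustrate reporting a range instead of a single number, the sketch below takes a set of hypothetical fair-value estimates (imagined as coming from different growth and discount-rate assumptions) and reports the median alongside a 10th to 90th percentile band, using `statistics.quantiles` from Python's standard library. The scenario values are made up for illustration.

```python
from statistics import median, quantiles

# Hypothetical fair-value estimates from different assumption sets.
scenario_values = [82, 88, 91, 95, 97, 100, 103, 106, 112, 125, 140]

point_estimate = median(scenario_values)
deciles = quantiles(scenario_values, n=10)  # 9 cut points: 10th ... 90th percentile
low, high = deciles[0], deciles[-1]

print(f"point estimate: {point_estimate}")
print(f"10th-90th percentile range: {low:.1f} to {high:.1f}")
print(f"range width relative to estimate: {(high - low) / point_estimate:.0%}")
```

The point estimate alone hides that the band is wide and skewed toward the upside scenarios, which is exactly the information an analyst needs to judge how much weight the number can bear.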

How trade & tonic approaches bias differently

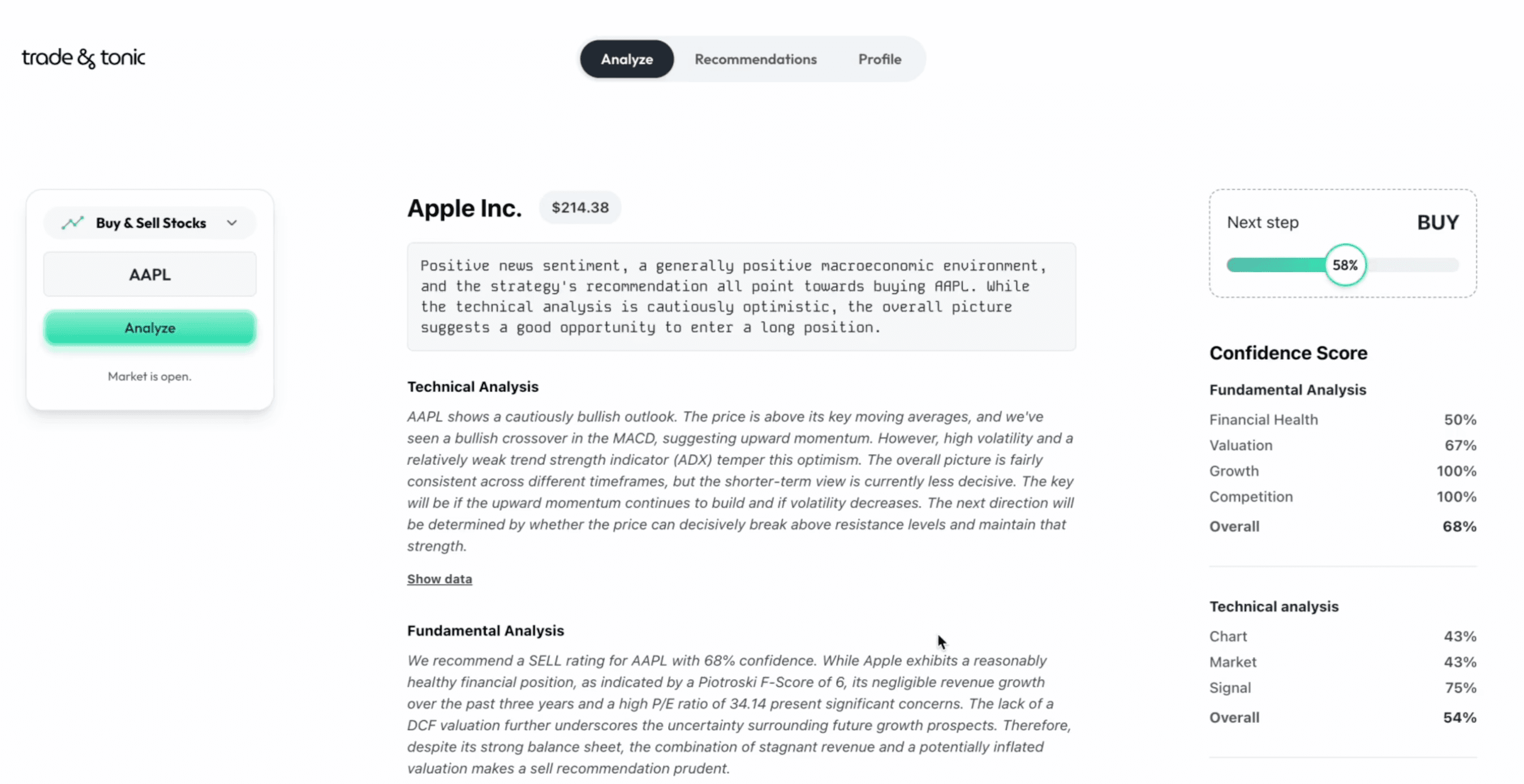

trade & tonic was built around a simple premise: bias cannot be eliminated, but it can be surfaced. Rather than producing a single opaque score, the platform uses multiple specialized AI agents that analyze different dimensions of a stock independently. Fundamentals, technical structure, news impact, peer context, and risk are evaluated separately before being combined.

Crucially, the system shows its work. Each insight includes an explanation of which signals are driving the view, how confident the system is, and how fresh the analysis is. When inputs conflict, that conflict is visible. When confidence drops, it is communicated explicitly.
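A hypothetical sketch of two ideas from that paragraph: surfacing conflicts between separately produced views, and lowering stated confidence as an analysis ages. The exponential decay with an assumed 7-day half-life is an illustrative choice, not a description of how trade & tonic computes freshness; the agent names and numbers are made up.

```python
from dataclasses import dataclass


@dataclass
class AgentView:
    agent: str
    stance: int        # +1 bullish, 0 neutral, -1 bearish
    confidence: float  # 0..1 at the time the analysis was produced
    age_days: float    # time since the analysis was produced


HALF_LIFE_DAYS = 7.0  # assumption: confidence halves every week without fresh data


def current_confidence(view: AgentView) -> float:
    """Exponentially decay confidence with the age of the analysis."""
    return view.confidence * 0.5 ** (view.age_days / HALF_LIFE_DAYS)


def report(views):
    """Describe each view with decayed confidence and flag directional conflict."""
    stances = {v.stance for v in views if v.stance != 0}
    conflict = len(stances) > 1  # at least one bullish and one bearish view
    lines = [
        f"{v.agent}: stance {v.stance:+d}, confidence {current_confidence(v):.2f} "
        f"(was {v.confidence:.2f}, {v.age_days:g} days old)"
        for v in views
    ]
    if conflict:
        lines.append("conflict: agents disagree on direction")
    return conflict, lines


views = [
    AgentView("fundamentals", -1, 0.8, 14),
    AgentView("technicals", +1, 0.7, 0),
    AgentView("news", +1, 0.6, 3.5),
]
conflict, lines = report(views)
print("\n".join(lines))
```

The two-week-old bearish fundamentals view is still shown, with its confidence cut to a quarter, so the disagreement stays visible instead of being silently outvoted.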

This design choice reflects a deeper philosophy. AI should not replace human judgment. It should stress-test it.

By making assumptions visible and confidence measurable, the platform helps investors recognize when they are leaning too heavily on one narrative, one metric, or one regime.

AI as a debiasing tool, not an oracle

The most valuable role AI can play in investing is calibration.

Used correctly, AI can counteract overconfidence by showing uncertainty. It can reduce confirmation bias by presenting opposing signals. It can counter recency bias by anchoring analysis in longer-term data. It can expose narrative bias by separating the story from the numbers.
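Calibration can be checked directly: if a system says it is 90% confident, its calls at that level should be right about 90% of the time. The sketch below computes a Brier score and a bucketed reliability table from a small, made-up track record of stated probabilities and outcomes. The bucket edges and the 0.1 tolerance are arbitrary assumptions.

```python
# Hypothetical track record: (stated probability the call is right, outcome 1/0).
records = [
    (0.9, 1), (0.9, 1), (0.9, 0), (0.9, 0),
    (0.7, 1), (0.7, 1), (0.7, 0),
    (0.5, 1), (0.5, 0),
]

TOLERANCE = 0.1  # assumption: smaller gaps are treated as noise in a small sample


def brier_score(records):
    """Mean squared gap between stated probability and outcome (lower is better)."""
    return sum((p - y) ** 2 for p, y in records) / len(records)


def reliability(records, edges=(0.0, 0.6, 0.8, 1.0)):
    """Group calls by stated confidence and compare with the realized hit rate."""
    table = []
    for lo, hi in zip(edges, edges[1:]):
        # Half-open buckets, except the last one also includes its upper edge.
        bucket = [(p, y) for p, y in records
                  if lo <= p < hi or (hi == edges[-1] and p == hi)]
        if bucket:
            stated = sum(p for p, _ in bucket) / len(bucket)
            realized = sum(y for _, y in bucket) / len(bucket)
            table.append((lo, hi, len(bucket), stated, realized))
    return table


print(f"Brier score: {brier_score(records):.3f}")
for lo, hi, n, stated, realized in reliability(records):
    flag = "overconfident" if stated - realized > TOLERANCE else "roughly calibrated"
    print(f"{lo:.1f}-{hi:.1f}: n={n} stated={stated:.2f} realized={realized:.2f} {flag}")
```

In this made-up record the 90% calls are right only half the time, the pattern of overconfidence that a calibration check is meant to surface.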

A 2023 report from McKinsey on AI in decision-making found that organizations using AI as a “challenge function” rather than a decision-maker improved outcomes significantly more than those that automated decisions outright.

In investing, the same principle applies.

The uncomfortable conclusion

AI models are teaching us how human markets actually work. They show that bias is not the exception but the baseline, and that the real edge comes from recognizing when it is influencing decisions.

In that sense, the most powerful AI systems are the ones that make uncertainty explicit. AI isn't emotionless, and it isn't unbiased; designed to be transparent, it can at least make bias visible. And for finance professionals willing to confront their own assumptions, that may be its most valuable lesson.

______________

trade & tonic is an intelligent investment analysis platform built for thoughtful investors who want to understand why a stock moves, not just whether it will go up or down. It combines advanced AI models with time-tested investing principles to deliver transparent, easy-to-understand insights that replace noise with clarity.
